LG MAL

Shipped 2023

SaaS

End To End

♦︎

TL;DR

Driving Consistency & Efficiency with LG’s AI Content Verification Platform

ROLE

PRODUCT DESIGNER

TEAM

PM, ENGINEERS, TECHNICAL WRITERS

DURATION

DEC 2023 - JUL 2024

PROBLEM

Awkward phrasing and grammar mistakes in the product UI weren’t caught before launch, causing users to lose trust in the product’s quality and damaging the overall brand experience.

SOLUTION

After the internal audit, I expanded the scope to design a new internal SaaS tool that lets teams search, verify, and store content to improve quality and consistency across the entire writing process.

IMPACT

The system caught 134 errors, nearly three times as many as manual review, and cut revision time by 50%, improving both content quality and delivery speed.

♦

CHALLENGE

“Feels like a dumb AI translated another language into English.”

Google user review

As LG aimed to build a stronger premium presence in the U.S., poor UX writing started showing up as a real problem. Awkward phrasing, grammar mistakes, and inconsistent formatting were frustrating users and making the products feel less polished than they should.

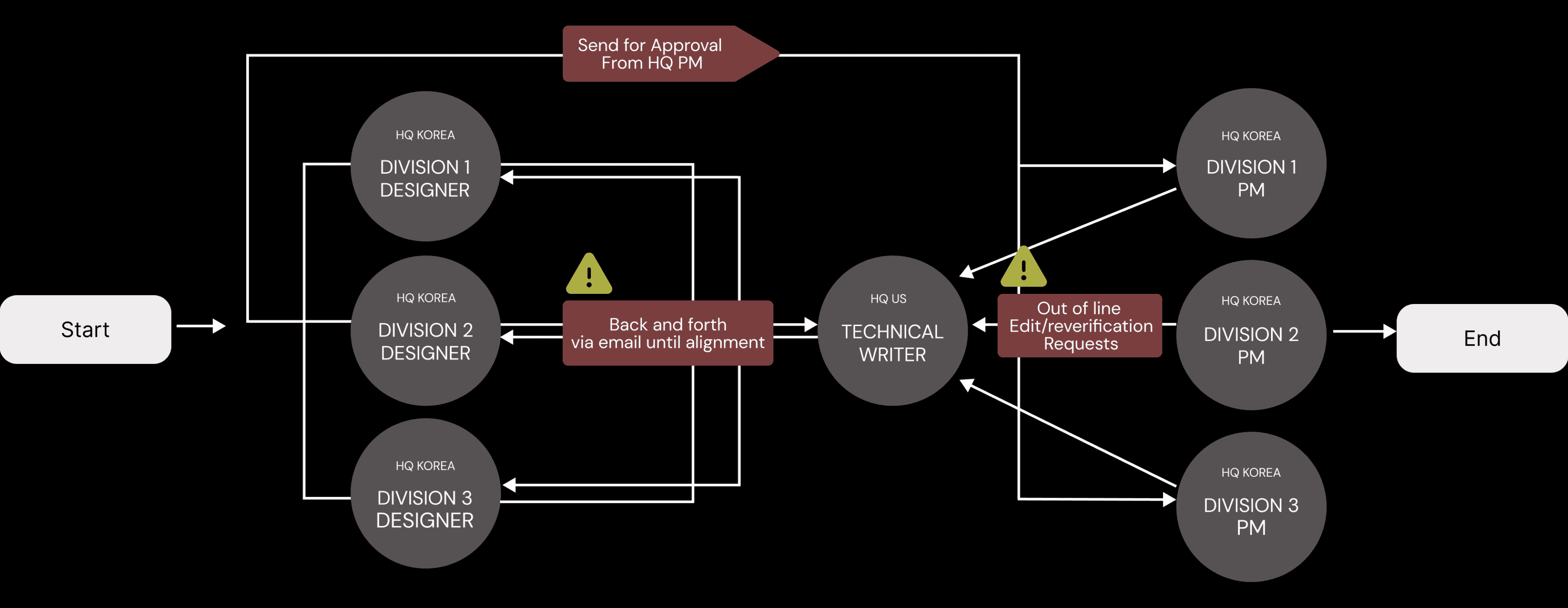

An internal audit revealed that the label creation and verification process was overly manual and complex, with fragmented workflows across global teams leading to frequent inconsistencies and quality gaps.

This led our team to ask:

“How might we make product content more consistent and clear, so users don’t feel confused or disappointed when using our products?”

♦

SOLUTION OVERVIEW

I set out to build a solution that improved user-facing content quality while making the process easier for internal teams.

The focus was on simplicity, with a clean, intuitive interface that anyone could understand at first glance while addressing three key needs our internal stakeholders emphasized: easy search, automated verification, and one unified space to store all guidelines and exceptions.

SEARCH

VERIFY

STORE

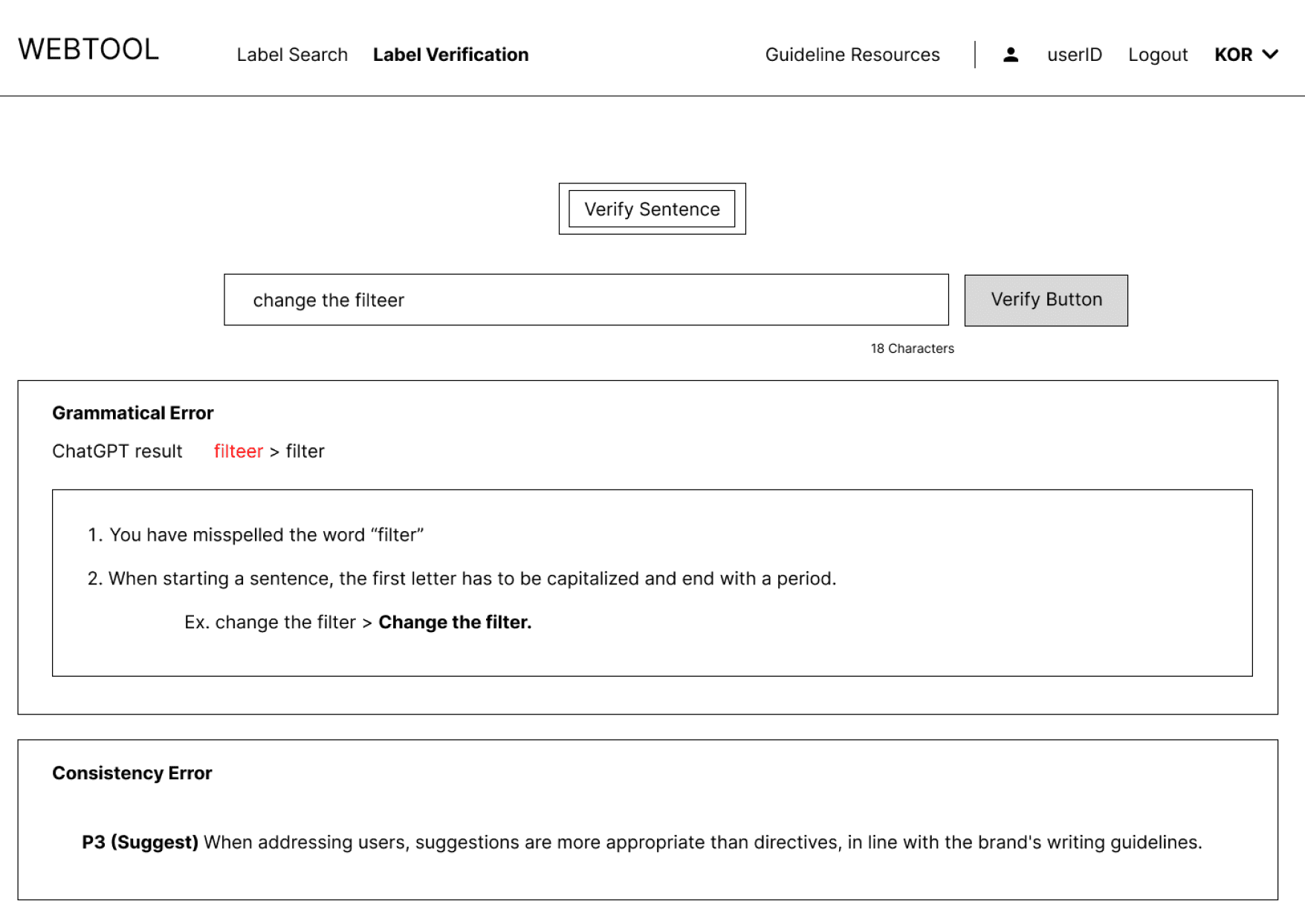

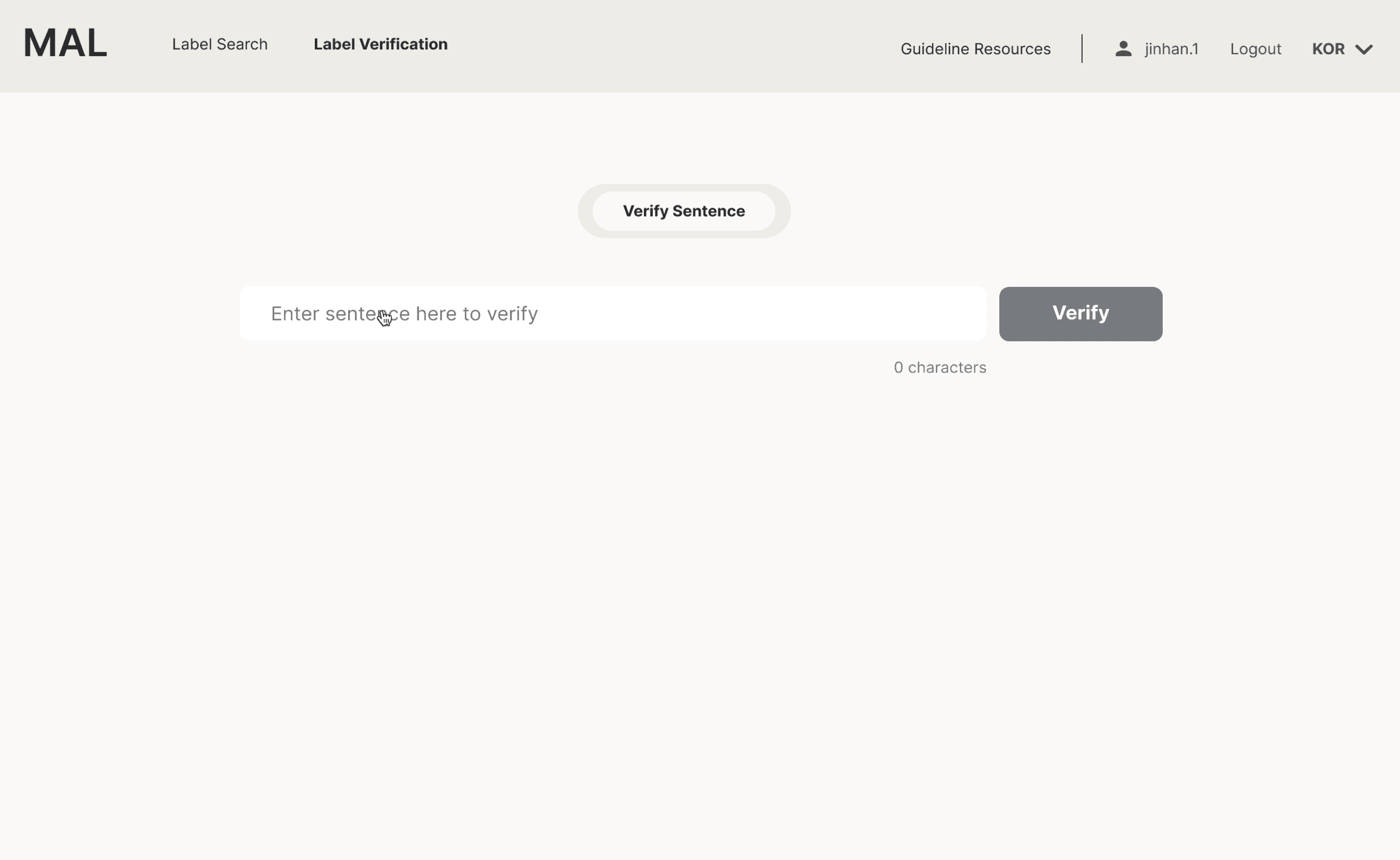

The Verify tab allows employees to review an entire label, leveraging Busan University AI to catch minor grammar errors and ensure compliance with brand UX writing guidelines.

Employees can access the latest style guide across all HQ divisions in one platform, eliminating the need to track down document owners for product term details.

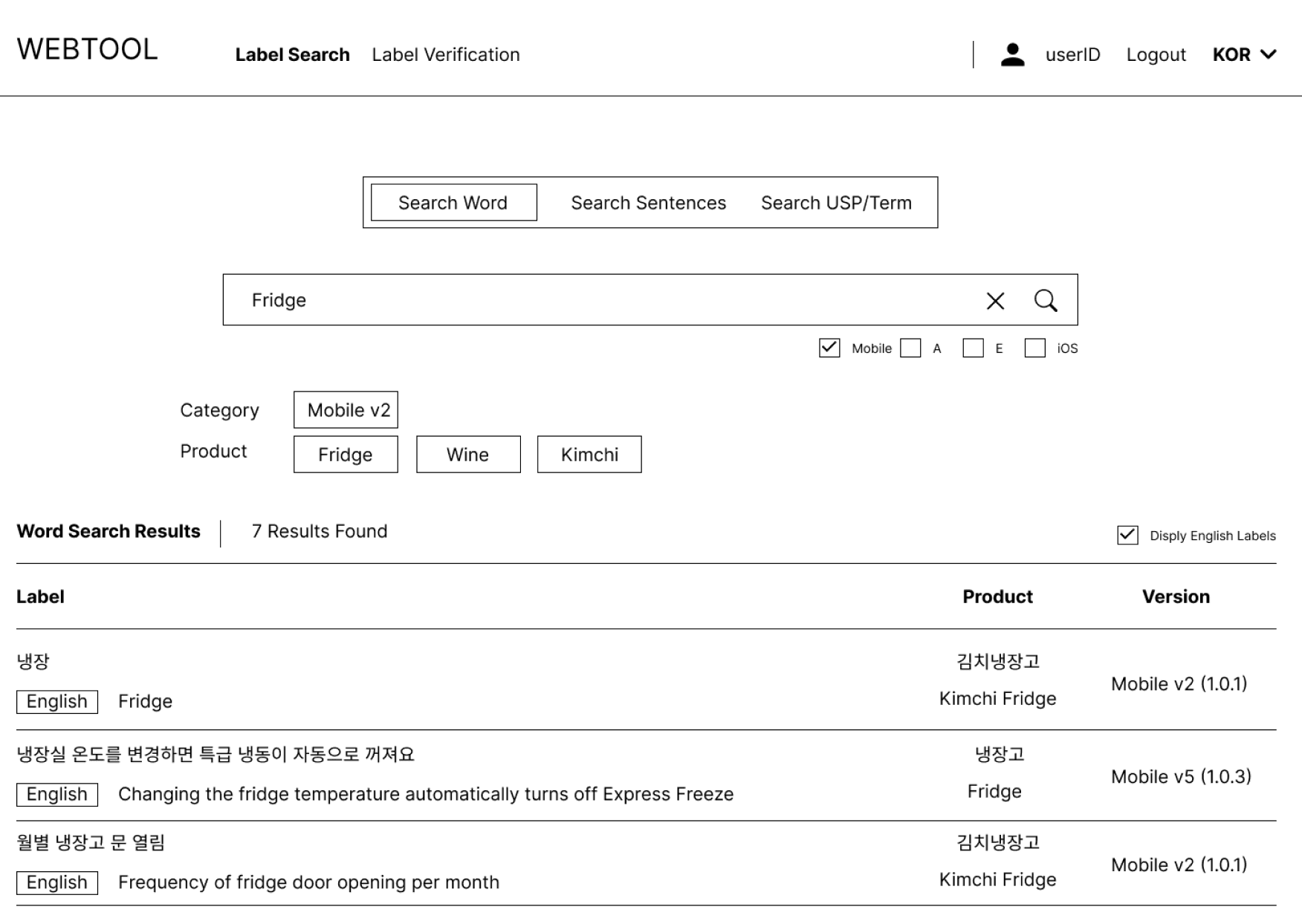

Employees can quickly look up past label examples by typing a word, phrase, or branded term, making it easy to stay consistent with brand standards and accurate terminology.

01.

RESEARCH

First, I needed to understand why so many label errors were slipping through and going unnoticed before product launch.

Interviewed 10 employees across LG Korea & US HQ

Shadowed a technical writer in Chicago R&D to understand the verification process

Analyzed 300+ labels reported to have errors

Research revealed that...

Each department had its own set of rules, and new content bounced between teams via email as separate Excel attachments, often duplicating review cycles.

Looks crazy, I know.

The manual process came with other major side effects.

Many employees lacked English fluency but couldn’t use translation tools due to company policy, and past label references were buried in emails — hurting content quality and cross-team trust.

KOREA HQ

HEEJAE

Product Designer

“I’m not fluent in English so I want to look at past labels for reference because I worry about making grammar mistakes, but it’s really hard to find them.”

PAIN POINT

US HQ

MEGAN

Technical Writer

PAIN POINT

"I don't have time to version control my edits. I often end up sending contradictory changes, and it's really embarrassing."

KOREA HQ

JUNG HO

Product Manager

PAIN POINT

"Since different teams manage the label lists and their own rules, it’s hard for me to find the latest version for reference."

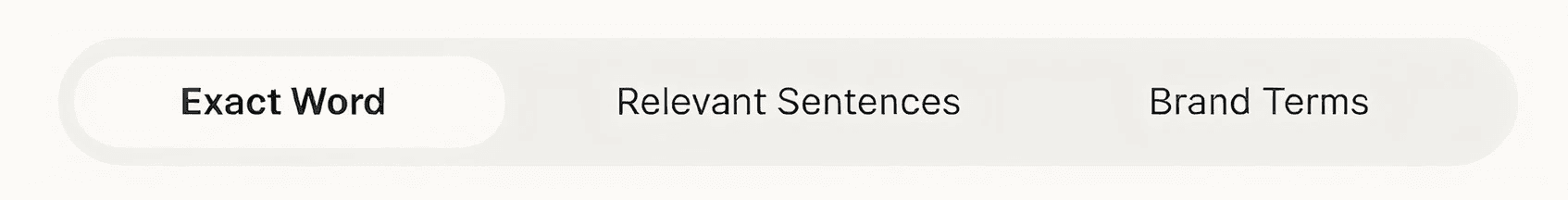

SEARCH

REFERENCE

VERIFY

Known Issues

Lack of universal UX writing guidelines

Inconsistent label verification results

Access to past label examples of similar products or services

New Issues

Language proficiency

No technical tools or support provided for consistent results

No access to past label examples

Manual verification process affecting results consistency

02.

SCOPE

It became clear that what we truly needed was a single source of truth: a shared system that connected every contributor, no matter their team, role, or location.

I pushed to expand the project beyond a simple UX writing guideline update to design a new 0–1 verification tool to improve collaboration and efficiency across teams.

I wanted to address two critical gaps:

No universal guidelines or single source of truth: Collect, organize, and unify data from different teams into one shared system.

No single tool to support quality content creation: Build a simple platform employees can use to reference past work, automate verification steps, and manage everything related to label creation in one place.

Original Scope: UX writing guideline

Expanded Goal: 0-1 verification tool

Phase 1: Collect data and create universal UX writing guideline

Phase 2: Build verification tool MVP to test with internal stakeholders

03.

PHASE 1: DEEP DIVE INTO DATA

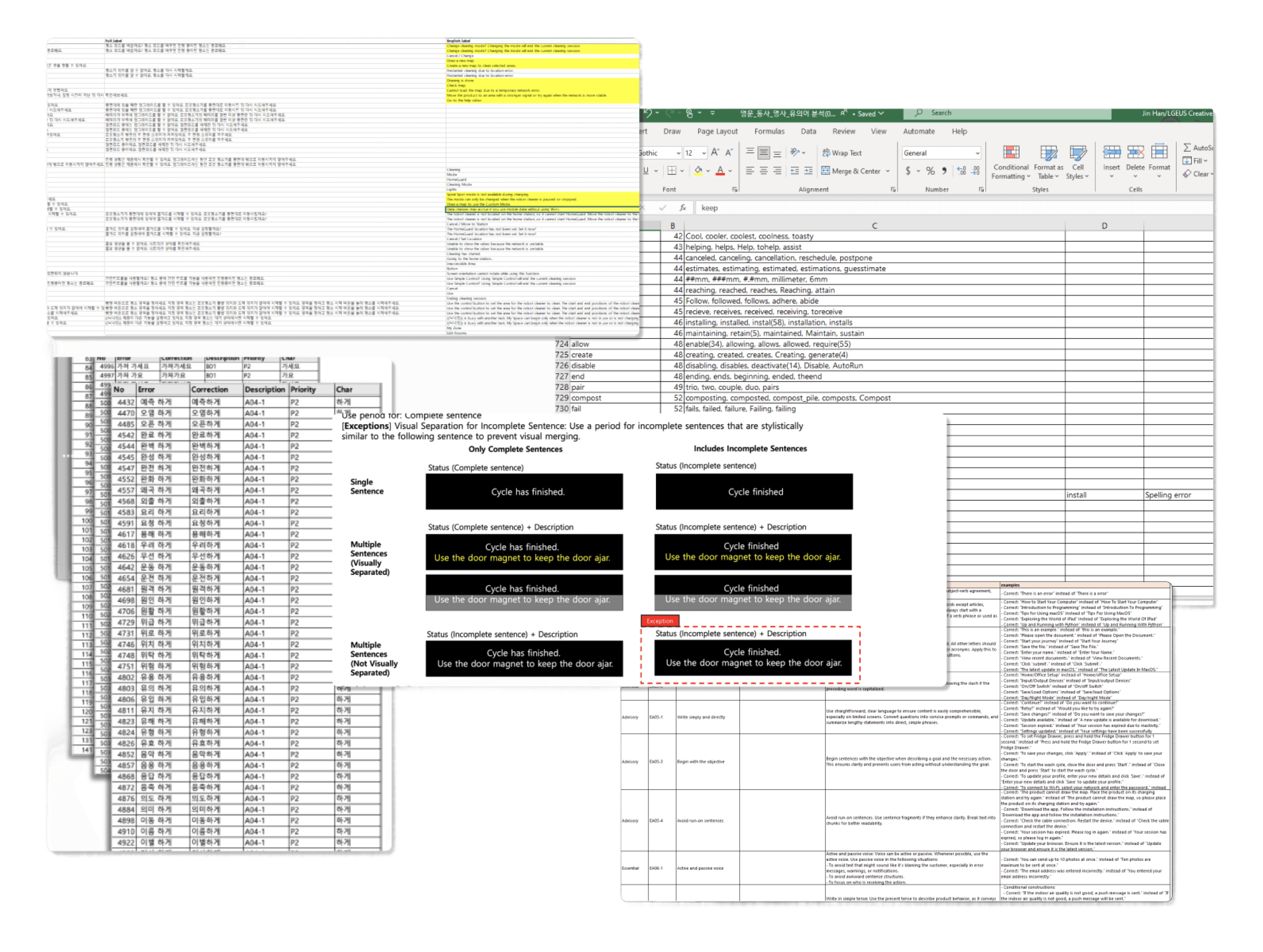

My first step was auditing LG’s legacy label database.

It was a patchwork of inconsistent formats, tones, and even conflicting rules across divisions.

I led the effort with UX writers and global stakeholders to establish universal brand guidelines, unifying core standards while accommodating each division’s restrictions, before moving into design.

Once we collected all the datasets from different divisions and teams from Korea & US HQs, I partnered with engineers to structure the database into two parts:

Rule Data (the standards each label must meet)

Label Data (the content being verified)
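The two-part split can be pictured with a minimal sketch. Everything in it is an assumption made for illustration: the field names, the "ALL" marker for universal rules, and the two sample rules are hypothetical and do not describe MAL's actual schema or LG's guidelines.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass(frozen=True)
class Label:
    """One piece of UI content to verify (hypothetical fields)."""
    text: str
    division: str


@dataclass(frozen=True)
class Rule:
    """One standard a label must meet (hypothetical fields)."""
    name: str
    division: str  # "ALL" marks a universal rule in this sketch
    check: Callable[[str], bool]  # returns True when the text passes


def verify(label: Label, rules: List[Rule]) -> List[str]:
    """Return the names of the applicable rules that the label fails."""
    return [
        rule.name
        for rule in rules
        if rule.division in ("ALL", label.division) and not rule.check(label.text)
    ]


# Illustrative rules only; not LG's actual guidelines.
RULES = [
    Rule("no_double_spaces", "ALL", lambda text: "  " not in text),
    Rule("max_40_chars", "TV", lambda text: len(text) <= 40),
]

print(verify(Label("Turn on  Wi-Fi", "TV"), RULES))  # ['no_double_spaces']
print(verify(Label("Turn on Wi-Fi", "TV"), RULES))   # []
```

Keeping rules separate from labels means a universal rule and a division-specific rule can be applied to the same label without duplicating the label itself.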

LABEL DATABASE

Collection of labels across the global teams

RULE DATABASE

Collection of brand rules

Grammatical rules and hierarchical rules based on different languages

Collection of unique guidelines for each division

MAL API

MAL WEB (Not ideated yet)

04.

PHASE 2: NON-DISRUPTIVE DESIGN EXPLORATION

From stakeholder interviews, one theme was loud and clear...

“I don’t have time to learn another tool.”

The real challenge wasn’t building the tool — it was ensuring people would actually use it.

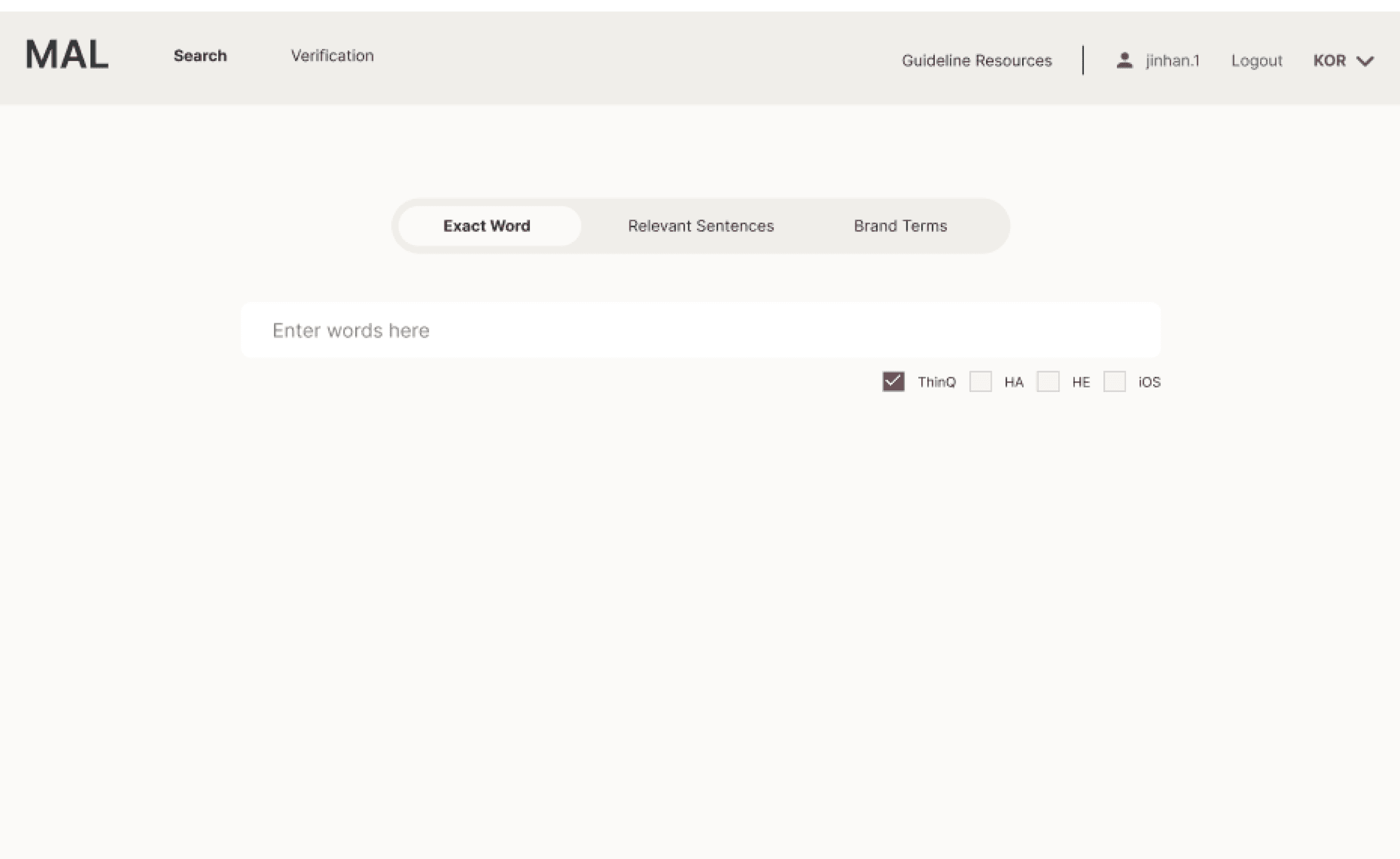

To drive adoption, I focused on simplicity and familiarity, designing an interface inspired by a search engine: one box, one primary action.

Based on the research insights, I created low-fidelity wireframes to align quickly with engineers on three core features: Search, Verification, and Guideline Storage.

We received approval for a 3-month pilot and had just four weeks to deliver a working MVP for key stakeholders to test its impact on workflow, so I moved quickly into high-fidelity design.

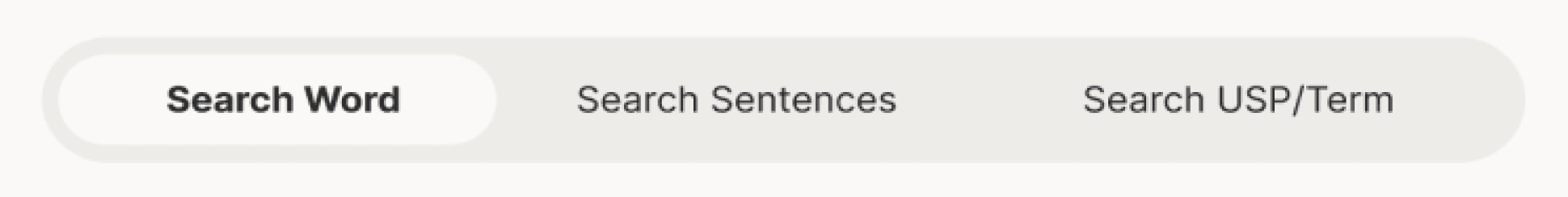

KEY FEATURE 1: SEARCH

With Search, users can instantly look up past label examples by entering a word, sentence, or branded term, helping them stay consistent with brand standards and proper usage.

KEY FEATURE 2: VERIFY

With Verify, users can review full labels in one view, using AI to flag grammar issues and check alignment with universal style guidelines.

KEY FEATURE 3: GUIDELINE

With Guideline Storage, users can access approved reference materials from each HQ division, eliminating the need to contact document owners for clarification.

(Full guideline documents were excluded from the UI to meet compliance policies.)
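As a rough illustration of the Search behavior, the sketch below does a case-insensitive lookup over past labels. It assumes plain substring matching and uses made-up sample labels; the function name and data are hypothetical, and the real tool's matching logic is not described in this case study.

```python
from typing import List

# Hypothetical past labels; real data would come from the label database.
PAST_LABELS = [
    "Connect to Wi-Fi",
    "Wi-Fi connection failed. Try again.",
    "Turn on Bluetooth",
]


def search_labels(query: str, labels: List[str]) -> List[str]:
    """Return labels containing the query, ignoring case and outer whitespace."""
    needle = query.strip().casefold()
    if not needle:
        return []
    return [label for label in labels if needle in label.casefold()]


print(search_labels("wi-fi", PAST_LABELS))
print(search_labels("try again", PAST_LABELS))
```

A single word and a short phrase go through the same path, so users do not need to know a full label in advance to find it.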

05.

PILOT IMPACT

Even at the MVP stage, we saw a notable improvement in writing quality and workflow efficiency.

134 Errors Detected

The system detected 134 errors — nearly 3× more than the 51 found through manual review.

380 Pre-ship Errors

In 2022, 37 errors were caught pre-shipment; in 2023, the pilot flagged 380, proving the system’s effectiveness.
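The ratios behind these figures can be checked with a few lines of arithmetic. The numbers are the ones reported above; the variable names are only for illustration.

```python
# Figures reported for the pilot in this case study.
tool_errors, manual_errors = 134, 51
preship_2023, preship_2022 = 380, 37

detection_ratio = tool_errors / manual_errors
preship_ratio = preship_2023 / preship_2022

print(f"Tool vs. manual review: {detection_ratio:.1f}x")      # 2.6x
print(f"2023 pilot vs. 2022 pre-ship: {preship_ratio:.1f}x")  # 10.3x
```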

06.

USER TESTING

We were getting closer to achieving our business goal — producing more consistent, high-quality content in less time — but we also wanted to ensure users were truly satisfied with the experience.

To validate this, we conducted user testing at both Korea and US HQs, uncovering key pain points and refining the design based on those insights.

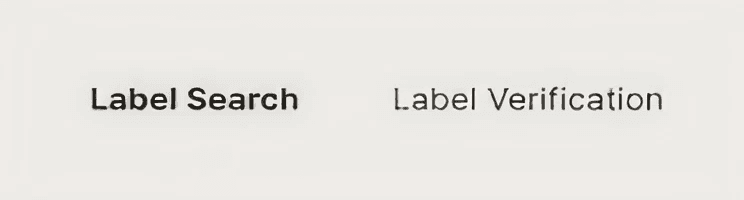

BEFORE

The word “label” misled users to think only long sentences were searchable, not single words or short phrases.

The term “USP/Term” was commonly used in Korea but unfamiliar to U.S. users, leading to confusion during testing.

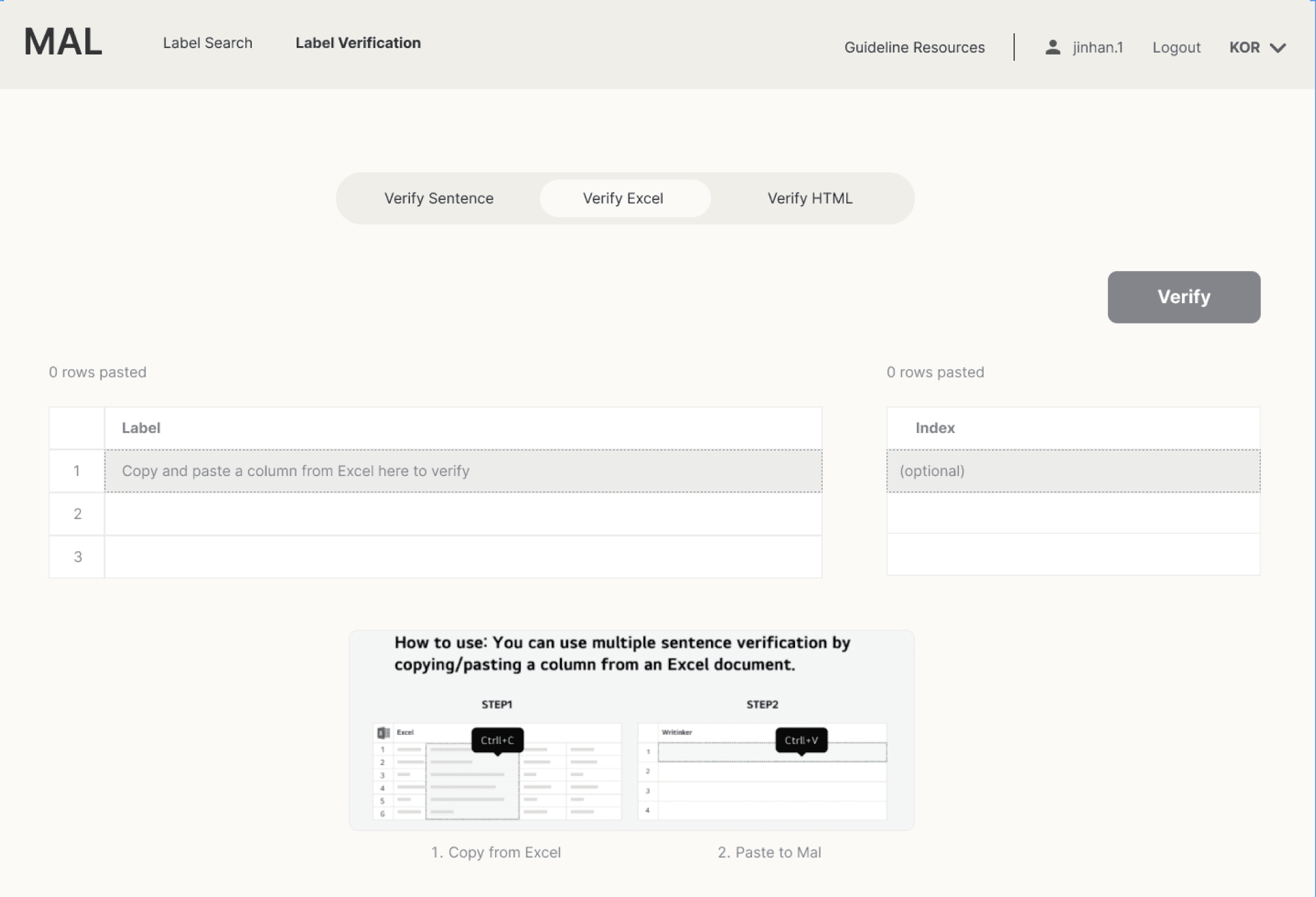

Mass Verification

During testing, Korea HQ users expressed the need for a faster way to verify multiple labels at once. We responded by adding bulk verification through Excel uploads and an HTML page verification tool, significantly improving workflow efficiency.

AFTER

Simplified the tab to make it more straightforward and easier to navigate.

Revised the wording to use plain, direct language that clearly guides users toward the intended action.

BEFORE

The MVP only supported single-label verification, but since each product contained multiple labels, users found it inefficient to verify them one by one.

AFTER

Introduced two new mass verification tabs to support users who needed to review multiple labels at once.

07.

FINAL ITERATION

UX WRITING IMPROVEMENT

While U.S. users appreciated the tool’s value, testing revealed friction in understanding certain terms and labels. I refined the UX writing — simplifying language and clarifying functions — to create a smoother, more intuitive experience.

08.

RESULTS AND OVERALL IMPACT

The first version of MAL rolled out across select LG teams — and the results spoke for themselves.

While the pilot proved its value through clear efficiency gains, the deeper impact was strategic. It set the foundation for responsible AI scaling across LG, with MAL defining the tone, rules, and workflows that would guide future automation efforts.

Identified impact during pilot

134 Errors Detected

The system detected 134 errors — nearly 3× more than the 51 found through manual review.

380 Pre-ship Errors

In 2022, 37 errors were caught pre-shipment; in 2023, the pilot flagged 380, proving the system’s effectiveness.

Additional impact after rollout

50% Time Saved

The tool reduced back-and-forth revisions by 50%, enabling teams to deliver polished content faster.

Cultural Shift

Teams that initially resisted AI began to trust it once they experienced how much it reduced their workload.

09.

REFLECTION

This project taught me that designing at enterprise scale is as much about people as it is about tools. I learned to navigate global workflows and balance complexity with simplicity — building a system powerful enough to scale, yet intuitive enough to adopt. I also saw how true alignment requires patience and clear communication, especially when priorities differ across teams and time zones. Most importantly, I came to understand the trade-offs of AI in practice: while it can accelerate reviews, cultural nuance still demands human judgment.

In the end, the real challenge wasn’t just building a tool — it was building trust in the system around it.

The project was later recognized with an internal AI Innovation Award, acknowledged for improving workflows across global teams and demonstrating measurable impact at an organizational level.
